Digital Acts/Analog Chaos: The Future of Warfare

November 11, 2025

As AI becomes increasingly integrated into society, and major tech breakthroughs remain on the horizon, one question persists: are we prepared for the inherent threats? Between high-profile hacks, cyber espionage and full-scale attacks that straddle the line between modern and conventional warfare, it’s clear that we are unable to prepare on all possible fronts. With the possible addition of Artificial Superintelligence (ASI), is there a chance that we’ve already run out of time? This article explores the possibilities, pitfalls and problems of the coming era, and what we might do about them.

By: Sabeen Rizvi

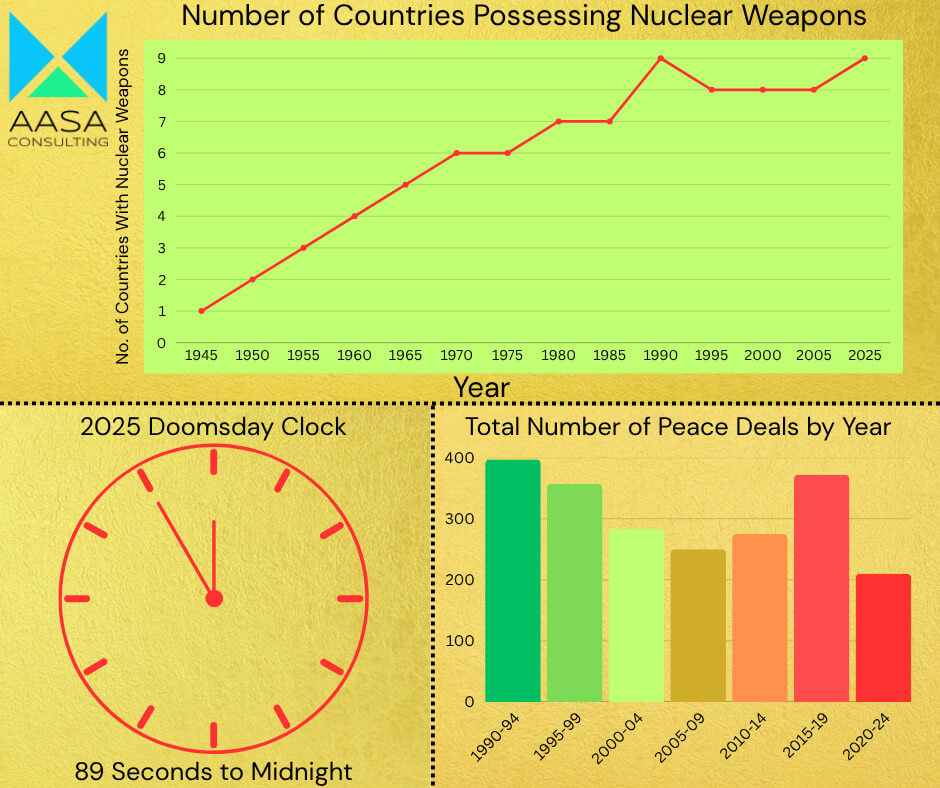

When it comes to peace and diplomacy, no question casts quite as long a shadow as technology. Historically, the nation with the strongest weapons, whether cannons or newly invented nuclear explosives, held a massive advantage. In some cases, a military innovation was the deciding factor in a war, rendering all other weapons useless on the field of battle. Added to this was sabotage: supply line disruption, espionage, attacks on infrastructure. Now, imagine taking an advanced technology that can be used for military purposes and installing it into key infrastructure across almost every country. Not only that, but imagine that infrastructure becoming so valuable to the country that any disruption could result in both economic losses and civilian deaths. It is a recipe not only for disaster, but for long-term consequences reaching far beyond what humanity can prepare for. In the current situation, there are many issues that need to be considered.

A Rapidly Evolving (and Mutating) Danger

Cyber warfare has evolved from isolated acts of digital espionage into a persistent element of modern conflict and competition. Initially limited to data theft and website defacement, it now encompasses operations capable of disrupting entire systems and economies. Governments, private actors, and organized groups all play a role in this new domain, where attacks can originate from anywhere and attribution is often unclear, which makes any response harder still. The blurred line between state involvement and independent action makes regulation and accountability especially difficult. Most nations now assume that they are, for the most part, in a state of constant digital vulnerability. As artificial intelligence becomes more integrated into defence and surveillance systems, it introduces new risks of autonomous decision-making and rapid escalation beyond human control.

Large-Scale Attacks as a Rule

Modern cyber operations target what societies depend on most, including power grids, communication systems, financial institutions, and essential government databases. Attacks may involve ransomware, denial-of-service campaigns, or the silent infiltration of networks to gather intelligence. The methods are constantly evolving, with even smaller operations having the potential to create wide-reaching disruption. This makes most attacks extremely hard for the modern individual and state to avoid. Abandoning digital systems is too steep a cost, given the value they generate and their productivity-boosting and at times life-saving functions. As digital dependency increases, so does exposure, and the challenge lies in protecting systems that were often not designed with resilience in mind. When these systems are set up, in the current model of capitalism, it is unclear whether they will be scaled or become crucial to any vital functions in the future. Additionally, it saves money to ignore stringent safety protocols, with basic security (or even no security) being seen as sufficient. If a system does end up being scaled, its security should be improved, but too many corporations and even government bodies have shown a lack of initiative or foresight in this regard, meaning that multiple systems are likely deeply vulnerable. The growing use of AI-driven attacks, capable of learning and adapting in real time, adds another layer of complexity to these already fragile digital defences.

Legal Frameworks Playing Catch-Up

Efforts to regulate cyber warfare have lagged behind technological progress. While international law applies in principle, it is often ignored in practice. Evidence of this is so abundant that most assume that a global, rules-based order is all but non-existent. The advent of digital landscapes further complicates enforcement, verification, and attribution, making cyberspace a particularly useful avenue of attack for powerful states looking to avoid being traced. Global initiatives through the United Nations and regional alliances have sought to establish norms for responsible state behaviour, but consensus remains limited. Many countries now view cyber capability as an integral part of national defence. As usual, this has led to an ongoing tension between security, sovereignty, and global cooperation. In the case of technology, this has even more severe consequences, particularly for individuals. Civilians, especially the poorest and least powerful, pay the highest price for these attacks. As expected, there are no policies or broader frameworks that offer assured, enforceable protection for these groups. The rise of AI-driven cyber tools challenges these frameworks even further, demanding urgent updates to laws and policies that were not built for machine-led conflict. It may even be that AI is used to create its own policies, with potentially harmful consequences in the future.

Another Crossroads with Global Stakes

We’ve seen this issue with climate change, global pandemics and even cross-border natural disasters: cooperation is never as smooth or sought-after as it should be, despite benefiting all parties. As technology becomes more advanced and interconnected, the scope for both harm and cooperation expands. Artificial intelligence, possible strides in quantum computing, and the new threats posed by autonomous systems may redefine how cyber conflicts unfold. Each of these will raise new ethical and strategic questions. The future will likely depend less on who can launch the most sophisticated attacks and more on who can build the most resilient and transparent systems. Stability and mutual safety in cyberspace will require not only stronger defences but shared trust, something far harder to engineer than era-shifting technology, it seems. If AI continues to evolve unchecked, it may shift the balance of power in cyberspace entirely, making foresight and regulation more critical than ever. This may be a boon, ushering in an era of cooperation, mutual respect for sovereignty and possibly even better outcomes for citizens. However, given our current trajectory, it is more likely to become a catastrophe, with the race for AI fuelling what may eventually be the story of human extinction. As always, the choice is very much in our hands. Let’s hope we make the right one before those hands are replaced with sentient robotic arms.
